TestingPod faced the challenge of publishing quality content with a team of freelance writers from the software testing community.

They wanted to keep the content team small, because the blog was initially their way of giving back to the software testing community. By paying for contributions, they let anyone share unique insights on any software testing topic and get paid for it.

Although they had been able to filter out low-effort AI-written submissions, they still faced the challenge of reviewing and editing community content, as most contributors were testers first and writers second.

These contributors had interesting insights to share, but their drafts required significant edits before they were ready to publish. We knew the only way to grow the publication with a small team was to use AI, which led us to create AI assistants.

Creating Claude Assistants

To start getting results immediately, we created AI assistants (set up as projects in Claude) that helped us complete different review activities quickly.

For instance, one was a feedback assistant that turned rough reviewer notes on an article into clear, constructive feedback for the author. The team no longer had to spend time polishing their feedback while reviewing content.

The assistant saved the team time, but it wasn’t autonomous. It required significant oversight from the team, and it couldn’t take TestingPod to the scale we wanted.

We believed that to scale TestingPod while keeping it cost-effective to run, we needed a truly autonomous system: one that gives writers instant feedback and reduces the number of errors that reach the editors.

This led us to build VectorLint, a command-line tool that guides writers towards their desired quality standards through AI-automated evaluations.

VectorLint CLI Tool

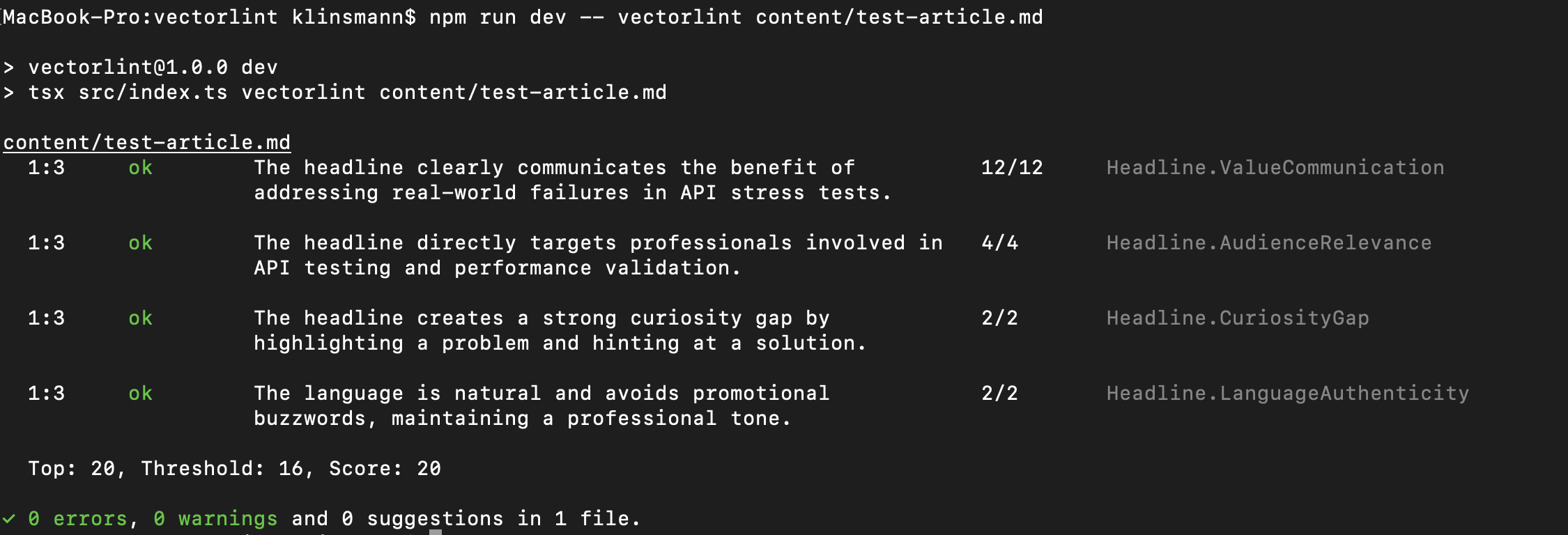

VectorLint is a command-line tool that runs content against carefully engineered content evaluation prompts.

It gives the user a score along with suggestions for improvement, so they can revise their content until it meets an acceptable quality score.

Because it is a command-line tool, VectorLint can run in a CI/CD pipeline on GitHub, so writers get feedback as soon as they submit their articles. Instead of submitting drafts and waiting days for a review, they get an immediate evaluation against TestingPod’s specific standards.

It can also run locally, so writers can fix issues before they submit.

What This Means For TestingPod

For TestingPod, this means they can scale their technical content strategy without increasing team overhead.

VectorLint makes it possible for a small team to maintain consistent quality standards across all contributors. Once the style guides are turned into evaluation prompts, every contributor gets consistent feedback on their content.

And with fewer issues reaching the content team, editors are free to focus on other priorities like distribution and community engagement.

Automated quality checks help ensure that every piece of content meets TestingPod’s standards, regardless of who wrote it.

The Vision: Giving Any Tester a Platform to Share Their Voice

Our ongoing goal is to keep expanding the evaluation prompt library.

As the content team identifies new issues during reviews, we capture them and convert them into automated evaluation prompts. Over time, this builds toward a fully autonomous review system that lets anyone with valuable testing insights share their knowledge, regardless of their writing ability.

This is how TestingPod will become the hub where testers share their unique experiences.